Great power competition: who is winning the generative AI race?

Artificial intelligence in general, and generative AI in particular, have sparked a major public debate over the future of global competition and international leadership in technology, a key geopolitical arena. The competition has become a two-fold race: one to develop AI, and one to adopt it.

While competition is a relevant element, it does not preclude cooperation between countries. The United States and China had the greatest number of cross-country collaborations in AI publications from 2010 to 2021, although the pace of collaboration has slowed. The number of AI research collaborations between the United States and China increased roughly fourfold since 2010 and was 2.5 times greater than the total for the next nearest country pair, the United Kingdom and China. However, the total number of US-China collaborations increased by only 2.1% from 2020 to 2021, the smallest year-over-year growth rate since 2010. Both competition and cooperation have increased in recent years, and both play out at three levels: governments, the private sector, and academia.

There is no disaggregated data on how much of government AI budgets goes to generative AI specifically. However, available data shows that the US federal government devotes the largest share of its AI funding to the decision science, computer vision and autonomy segments. In all three, generative AI plays an increasingly important role. The federal government increased its AI budget by more than $600 million year on year, up from $2.7 billion in 2021 (to roughly $3.3 billion). Total spending on AI contracts has increased nearly 2.5 times since 2017, when the US government spent $1.3 billion on artificial intelligence.

President Biden’s recently released Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence, a landmark in the US Administration’s push to bring AI into the public sector, refers to generative AI. Specifically, it states the need to protect citizens from “AI-enabled fraud and deception by establishing standards and best practices for detecting AI-generated content and authenticating official content. The Department of Commerce will develop guidance for content authentication and watermarking to clearly label AI-generated content.” However, this is the only such reference.

Even so, this statement aligns with the G7 Leaders’ Statement on the first-ever International Guiding Principles and a voluntary Code of Conduct, released on the same day. Born from the Hiroshima AI Process, the statement by Canada, France, Germany, Italy, Japan, the United Kingdom and the United States highlights the importance of engaging developers and agreeing on a common baseline of principles for developing AI, including generative AI. At the same time, Western countries have been paying more attention to generative AI, although still at a preliminary stage, as shown by the UK’s AI Safety Summit, which is devoted to the risks and opportunities of frontier AI, including generative AI.

Alongside these Western-centric approaches to generative AI, China has also been developing its own initiatives. No specific budget data has been made public in China’s case either, but the first-of-its-kind rules governing generative AI, announced by the government in July 2023, show the level, scope and importance of the issue. The rules, developed by the Cyberspace Administration of China (CAC), will apply only to generative AI services available to the general public, not to those being developed in research institutions. Generative AI providers will need to obtain a license to operate, conduct security assessments of their products and ensure that user information is secure, in line with the “core values of socialism”, as stated by the CAC.

Amid this landscape of national initiatives and minilateral coalitions, the United Nations has decided to reassert its role in shaping policy discussions at the global level. In particular, the UN Secretary-General, who had already proposed a Global Digital Compact to be approved at the Summit of the Future in September 2024, has set up a High-Level Advisory Body on Artificial Intelligence. Its two co-chairs come from Spain (an EU Member State active in AI, and expected to hold the Presidency of the EU Council when the AI Act proposal is finally approved) and from Zimbabwe.

While less information is available on government decisions addressing generative AI, the growing private-sector market shows the intensity and rapid growth, and thus the competition, of this technology vertical among companies. In this realm, neither China nor the US is the sole leader in generative AI: depending on the metric, one or the other leads.

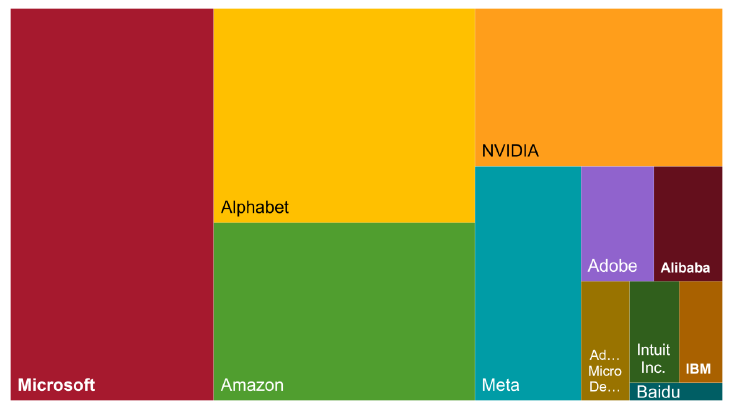

Top firms using generative AI: the US leads the way

Among the top firms using generative AI, US companies are far ahead of those from China and other countries. Microsoft leads the ranking with a market capitalization of $2.442 trillion, followed by Alphabet ($1.718 trillion). The leading Chinese company is Alibaba Group ($241.97 billion), which ranks seventh worldwide.

Figure 1. Top 12 firms using generative AI, by market capitalization

American firms also have a strong competitive advantage in the design and production of graphics processing units (GPUs). GPUs are the main hardware used to train and run most large language models (LLMs), deep learning models that can perform a variety of natural language processing (NLP) tasks. This provides major incentives and opportunities for innovation. The US also has strong leverage in other technologies closely linked to generative AI, such as cloud computing, which is essential for training and deploying generative AI models.

The ranking does not capture generative AI’s potential to break down market barriers and the strong clusters that end up producing gatekeepers. Even if large companies have access to the best technology and technologists, generative AI is growing very quickly and may bring new players into the game. Smaller competitors may be building more adaptable and, above all, cheaper and more accessible open-source AI models. If this assessment turns out to be true, AI is also fostering bottom-up competition from smaller AI enablers, which could generate even more innovation in the US and keep Chinese actors from leading the top generative AI firms, for two reasons.

First, companies based in China must comply with more government rules and face more oversight mechanisms over the content that flows through generative AI systems, whether on political, social or cultural issues. Second, Chinese companies might go through a process similar to the “tech crackdown” on Chinese companies that began in 2020. Chinese authorities launched a regulatory storm against the country’s Big Tech firms in late 2020 over concerns about the rapid growth and monopolistic behaviour of the country’s major internet platforms. It started with the suspension of the IPO of Ant Group, Alibaba’s fintech affiliate, in Shanghai and Hong Kong, and was followed by a wave of rules limiting companies’ international expansion. In 2023, after three years, the Chinese government announced its strategy to maintain the “bottom line of development security” and strengthen its “linkage effect” with international markets. This might mean that the technology crackdown is easing. Even so, generative AI companies may still find a more dynamic environment in the US than in China.

AI has been one of the main fronts of US-China competition, and the race does remain largely limited to these two countries. The EU, for instance, is falling behind: no European firm appears in the ranking. Kai-fu Lee, the former president of Google China and a prominent venture capitalist and AI expert, told Sifted that Europe is not even in the running for the “bronze medal” in the AI race. He argued that Europe lacks the success factors of the US and China: a VC-entrepreneur ecosystem; successful consumer internet, social media or large mobile application companies that can drive AI advancements; and the government support needed to win the generative AI race.

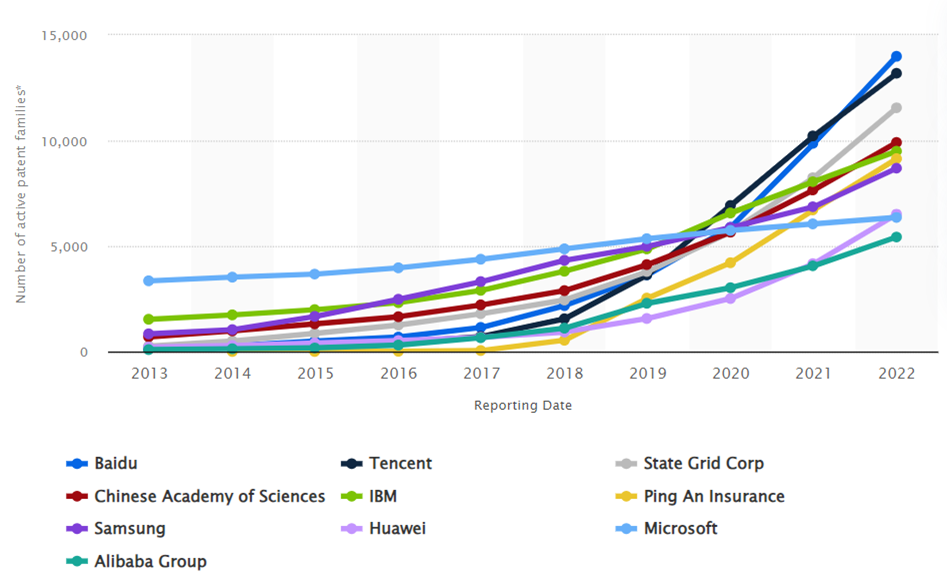

Top countries by AI patent applications: generative AI, intellectual property and China’s leadership

China has the most AI patent applications, with 4,636 applications, or 64.8% of all such applications globally. The US comes second with 1,416 AI patent applications, 19.8% of applications with offices listed, followed by the Republic of Korea with 532 applications. In 2022, China filed 29,853 AI-related patents, up from 29,000 the previous year. China accounted for more than 40% of global AI patent applications in 2022, mainly due to the monetization of AI products by top tech firms such as Baidu and Alibaba. China’s patent filings have exceeded those of the US since 2017 and now amount to almost double the US total. Japan and South Korea follow, with a combined total of 16,700 applications.

Figure 2. Largest patent owners in machine learning and artificial intelligence (AI) worldwide from 2013 to 2022, by number of active patent families

The Chinese government considers its domestic patent market a key economic sector and has been actively steering it since 2021, especially now that generative AI is a growing source of new patents. Last year, the China National Intellectual Property Administration (CNIPA) released draft measures to downgrade the ratings of Chinese patent agencies engaged in abusive or fraudulent patent schemes.

The Chinese Communist Party (CCP) supports domestic companies in generative AI, as in other strategic industries, through subsidies. Even though there are no state-owned enterprises in the AI industry, the CCP still influences market direction and works with private companies through financial and regulatory leverage. For instance, iFlytek has received substantial government subsidies of 258.18 million yuan ($37.7 million), exceeding half of the company’s annual net profit.

Europe is severely underperforming in patents. According to the WIPO report, of the 167 universities and public research institutions among the top AI patent filers, only four are in Europe. Of these four European public research organizations, the highest-placed is Germany’s Fraunhofer Institute, ranked 159th overall, while the French Alternative Energies and Atomic Energy Commission (CEA) is in 185th position.

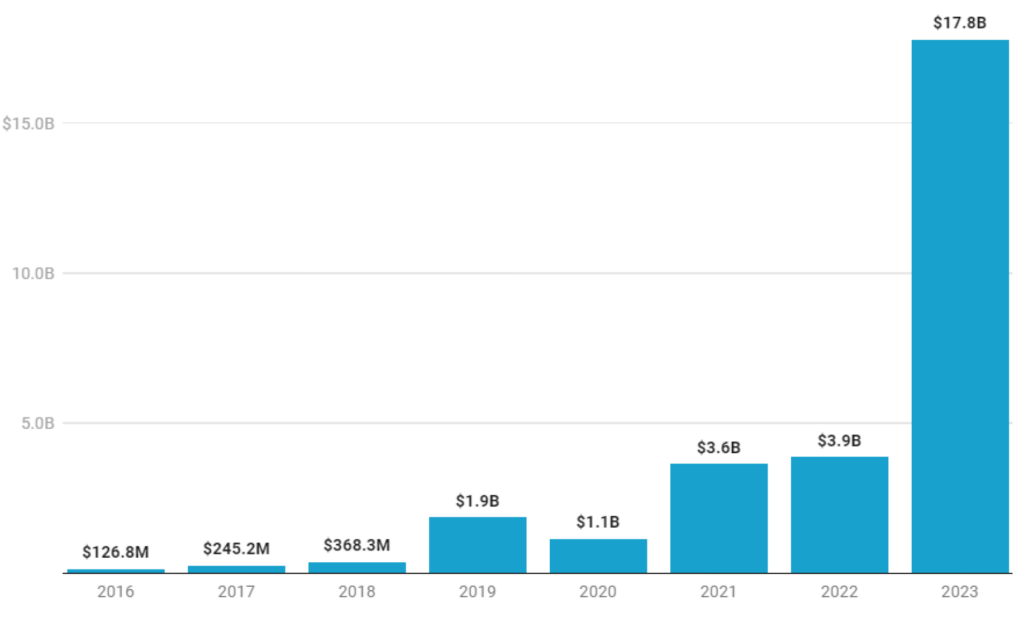

VC Investments in AI: comparison between countries

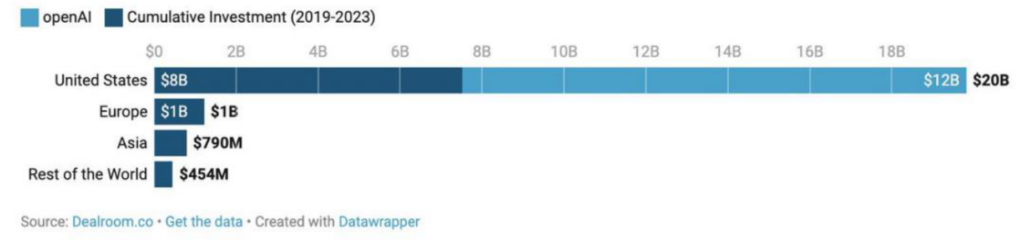

Since 2019, generative AI startups have attracted over $28.3 billion in funding from investors. In 2023 alone, they attracted $17.8 billion between January and August. In Q2 2023, generative AI startups raised over $14.1 billion in equity funding across 86 deals, making 2023 a record year for investment in generative AI startups. Of the global total, 89% (about $20 billion) went to the US, making it the leader in VC investment in AI.

Figure 3. Funding attracted by generative AI startups

Figure 4. Generative AI Venture Capital funding by regions

The US success in generative AI startups and venture capital must be seen in the context of the new players in the generative AI market and their ability to attract high-risk venture capital, notably the success of the US firm OpenAI. But it is not only this firm: according to Forbes’ AI 50 list from April 2023, the top five generative AI startups by funding are all from the United States. China, for its part, has also been investing in several generative AI applications. Twenty-two generative AI startups in China received funding, compared with 21 in the US and 4 in the UK. However, US startups received more funding in total. Of the 18 generative AI companies that received more than 100 million yuan (about US$13.8 million) in funding in the first half of the year, 12 were from the US, while only three were from China.

The Chinese government aims for AI to be worth 1 trillion yuan ($146 billion) to the country by 2030. While China has 14 AI unicorns worth a combined $40.5 billion, generative AI will still need greater investment for the country to achieve its goal of global leadership in this technology.

European startups received just $1bn of the $22bn that VCs have invested in generative AI since 2019, only about 5% of the total. Asian startups received $790 million. Assessing which country may become the leader in generative AI depends not only on the number of top firms, publications or startup funding, but also on which high-priority industries VC investment flows to and who ultimately receives it. In the US, more than 50% of generative AI investment flows into the media, social platforms and marketing sectors.

The second-largest sector is IT infrastructure and hosting, followed by financial and insurance services, digital security, travel, leisure and hospitality and, to a lesser extent, government, security and defence. Notably, US stakeholders invest almost 80% of their generative AI funding in these two sectors within their own country, not abroad.

Chinese stakeholders, for their part, also invest in generative AI for high-priority sectors within their home country, mostly in IT infrastructure and hosting; healthcare and biotechnology; business processes and support services; financial and insurance services; digital security; and media, social platforms and marketing. This shows that China and the US are both targeting media, social platforms and marketing, as well as IT infrastructure and hosting, as the main priority sectors for generative AI investment, and that both invest in them to strengthen their domestic industries. They diversify into other sectors, but these investments are not significant.

The implications of generative AI for security, economy and rights

Global security and defence

Generative AI poses both opportunities and risks for security and defence. On the one hand, it has become a valuable resource for defence policy and planning. In August 2023, the US Department of Defense (DoD) launched Task Force Lima to integrate generative AI into national security, both minimizing risks and leading innovation in defence.

NATO has also begun preliminary discussions on the potential impact of generative AI. NATO’s Data and Artificial Intelligence Review Board (DARB), which serves as a forum for Allies and the focal point for NATO’s efforts to govern the responsible development and use of AI by helping operationalise the Principles of Responsible Use (PRUs), hosted a panel briefing on generative AI and its potential impact on NATO to weigh the technology’s capabilities and limitations. One of the main conclusions was that the generative AI stack is not yet capable enough in reasoning and planning to perform the critical functions the military requires.

While this panel briefing had an informational goal, DARB’s eventual output on this issue remains of great interest. DARB is in charge of translating the PRUs into specific, hands-on Responsible AI Standards and Tools Certifications, and provides a common baseline for creating quality controls and risk mitigation mechanisms.

Another challenge for security and defence is the impact of generative AI on intelligence analysis. AI enhances situational awareness and geolocation through various open-source intelligence (OSINT) data sets, as well as decision-making and scenario-planning processes such as wargaming or foresight analysis. However, generative AI has so far tended to be fed by open-source data and platforms, which makes it harder for intelligence communities to use it as a trusted tool.

Focusing specifically on US-China competition, three of the world’s top five commercial drone brands are Chinese, while just one is American. More concretely, China’s DJI, one of the world’s leading AI firms, holds 70% of the global drone market, and its market value is expected to grow from $30.6 billion in 2022 to $55.8 billion by 2030. The growing use of generative AI in drones might create an additional race to develop these technologies for the battlefield.

However, this potential use of generative AI also carries risks, threats and challenges that may weigh on any decision to use it for security- and defence-oriented decision-making. Data leakage may pose a challenge for security and defence policymakers working with private companies that provide solutions for their services and projects. If a company uses open-source generative AI, the code sent to the GitHub service that manages this information flow could contain the company’s confidential intellectual property and sensitive data, such as API keys with privileged access to customer information.

Markets and economy

President Xi Jinping considered AI a technology in which China had to lead, setting specific targets for 2020 and 2025 that put the country on a path to dominance over AI technology and related applications by 2030. Generative AI is no different. As shown above, generative AI startup ecosystems are gaining relevance across countries. However, generative AI also raises new questions for the global economy.

First, in global trade, generative AI may be understood as both an enabling tool and an end goal. As an enabling tool, large language models may analyse large volumes of data on transactions, documents and contracts to identify anomalies, fraud risks, and compliance issues related to money laundering, economic sanctions and tax havens. AI may open new opportunities for global trade, although the data in previous sections show that countries have so far invested in this technology domestically, with limited cross-border flows.

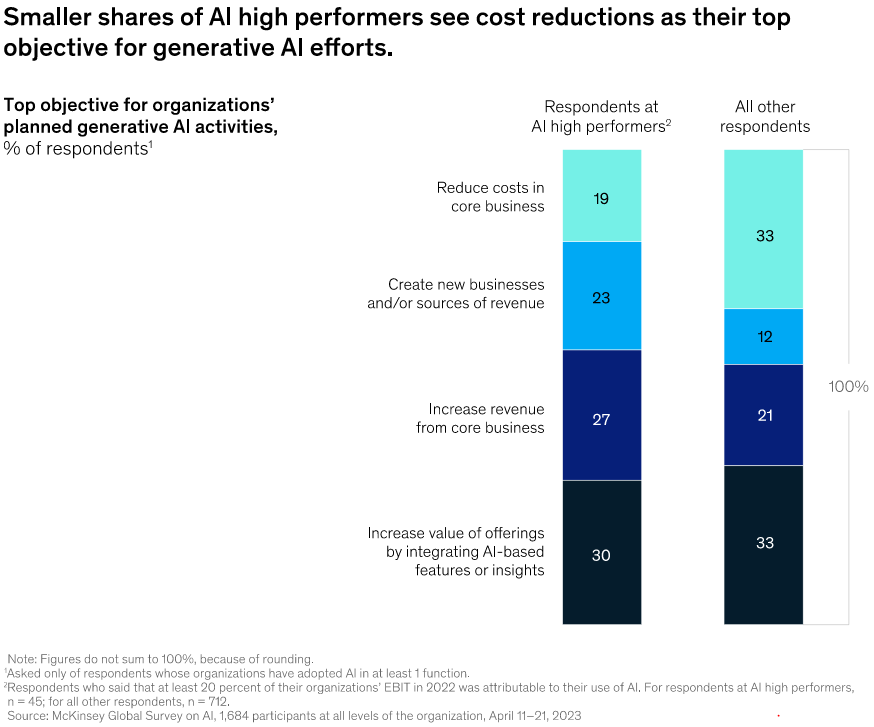

Second, generative AI may lead to two trends. On the one hand, companies aim to use generative AI internally to reduce the costs of partnerships with third parties. According to McKinsey, 33% of organizations aim to use generative AI to reduce costs in their core business (which might reduce the diversification of partners and suppliers from other industries and countries), and 12% aim to create new businesses or sources of revenue.

Figure 5. Top objective for organizations’ planned generative AI activities, 2023

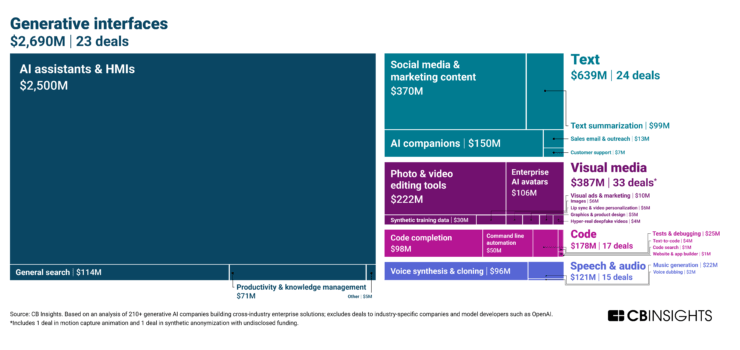

On the other hand, generative AI infrastructure will require cross-industry collaboration. Even if a company aims to reduce the costs of partnerships with third parties, become more competitive and generate higher revenues on its own, building the infrastructure still requires deals with other industries to make that goal feasible. According to CB Insights, building this infrastructure is leading to more cross-industry collaborations ($4 billion, 116 deals) than industry-specific ones (only $0.3 billion, 45 deals). These two trends mean that generative AI will create new layers of competition among companies but will also require greater cooperation across sectors to gain a technological edge.

Third, generative AI may create new grounds for competition and cooperation across sectors and products. For example, AI-enabled fintech is an increasingly important sector, and generative AI could considerably strengthen its global position. The more a country supports emerging ecosystems that are not yet at the top, the more successful and competitive they will be in the global arena. As of 2023, Tencent’s WeChat Pay, the main fintech services provider in China, has over 1.2 billion users, although it operates only in China. In contrast, Apple Pay has over 500 million users worldwide, at least 30 million of them in the US. The UK is an interesting case as well. Founded in London in 2015, Revolut now has over 30 million retail customers worldwide and 6.8 million UK users, having added over five million new users globally since November 2022. Lastly, in the EU, Sweden’s Klarna has over 4 million monthly users, which still leaves the EU with a large gap in AI-enabled fintech compared with other blocs.

Biometrics and facial recognition are another area where generative AI may be used and where competition is likely. Biometrics refers to identifying people by their physical or behavioural traits, and it has a strong dual-use dimension: it can be used to control populations, or combined with generative AI for deepfakes and other deception techniques. Generative AI can produce synthetic biometric data, such as fingerprints or faces, which can lead to personal data breaches, raise data retention concerns and erode overall trust in AI-enabled security systems.

Fourth, global economic competition, and cooperation, will depend not only on leading industries but also on the specific applications companies use. The more a country encourages private companies to invest in AI assistants and human-machine interfaces (HMIs), the more competitive it will be. However, if all companies focus on the same generative interface, they will lose competitiveness. This is why some countries are seeking leadership in other specific interfaces, for example India in code completion and voice synthesis.

Figure 6. Distribution of generative AI funding, Q3’22 – Q2’23

The US Federal Trade Commission is still working out how best to promote fair competition while protecting citizens from unfair or deceptive practices. Market concentration and oligopolistic tendencies may also emerge, as the largest firms are the ones with access to more data, talent and capital to deploy AI-generated content. As the ranking shows, the most powerful firms in AI are from the US. These global technology firms also appear to control all the “raw materials” needed to deploy AI: large-scale data storage, strong computing power and cloud services, as well as the world’s leading AI researchers and investment.

Likewise, state aid is an increasingly important, revived topic. China, the US, the EU, India and other technological powerhouses are increasing the subsidies they devote to strategic technologies, either to reduce their dependence on third countries that pose a high risk in the event of supply chain disruption or shock, or to become frontrunners in a specific technology.

China, among others, is developing Chinese Guidance Funds (CGFs). These public-private investment funds are a meeting point between the Chinese business community and the government, as the latter pours in money to mobilize massive amounts of capital in support of strategic and emerging technologies, including AI. The US has promoted state aid for critical technologies such as semiconductors, and the EU has done likewise.

Alongside subsidies, however, a critical point is how countries design export control regimes. For instance, restricting exports of one technology may hinder development in other sectors, such as generative AI. According to Albright Stonebridge Group, US restrictions limit access to some types of advanced semiconductors, which are necessary for the greater compute requirements of future LLMs. If Chinese companies cannot access graphics processing units (GPUs), they will struggle to develop LLMs as quickly and comprehensively as Western firms, limiting their share of the global marketplace.

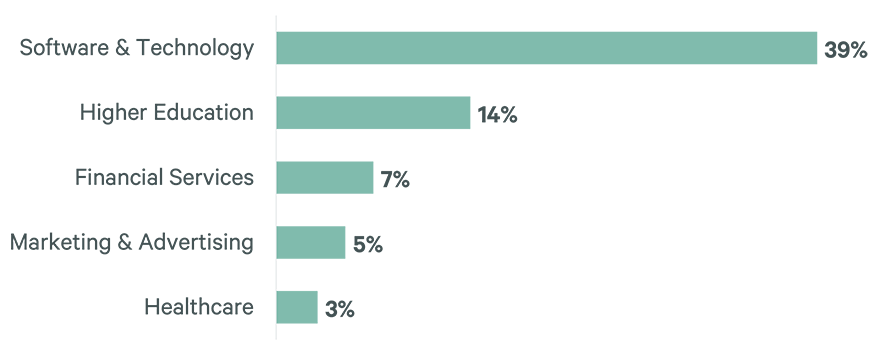

A highly skilled workforce in AI in general, and in generative AI in particular, remains strategic. In 2021, Quad partners Australia, India, Japan and the United States announced the Quad Fellowship, a first-of-its-kind scholarship program to build ties among STEM experts from the four countries. AI is one of the top-priority areas for these exchanges, which include PhD and master’s scholarships as well as talent exchanges between companies. This is of particular interest because the US has long retained the international AI PhDs it trains, including those from AI competitors such as China. Moreover, certain sectors related to generative AI employ the greatest share of US AI talent, and this trend may grow in the coming years.

Figure 7. Sectors that employ the greatest share of US AI talent, 2023

By contrast, other studies have found that most China-trained AI talent currently lives outside China. Estimates indicate that China’s demand for AI-skilled workers will grow sixfold by 2030, from one million to six million. To close this gap, Chinese universities and research centres are participating in a wide range of government-backed programmes to attract and retain AI talent.

Rights and global governance

Generative AI has received attention from the perspective of rights and global norms. The European Parliament, which has long been working on the AI Act proposal, paused one of its final stages over concerns about loopholes that might leave generative AI outside the AI Act if not properly covered.

Likewise, Spain, the United States and the United Kingdom launched the OECD Global Forum on Technology, a platform for dialogue and cooperation on digital policy issues, where generative AI was a prominent topic and a top priority. The OECD has developed a set of AI principles based on a humanistic approach to foster trustworthiness, fairness, transparency, security and accountability, among other values. The OECD’s perspective on generative AI and digital rights is based on the idea that these technologies should be aligned with human values and serve the public interest. The Global Partnership on AI (GPAI) has published policy reports on the potential impact of generative AI on certain global issues, such as the future of work.

The Internet Governance Forum (IGF) addressed generative AI at its latest meeting, held in Japan in October 2023. The IGF’s perspective on generative AI and digital rights rests on the idea that these technologies should be governed globally, with the participation of all states. The International Telecommunication Union (ITU), a UN agency, approaches generative AI under the umbrella of the Sustainable Development Goals (SDGs) and bridging the digital divide.

Even amid global competition over generative AI, most international forums appear to be increasingly framing their positions on AI governance from a humanistic perspective.

The role of the EU in the global implications of Generative AI

While the EU’s position in the generative AI economy is less prominent than that of China or the US, the EU has engaged actively with the potential security and rights challenges.

Regarding its economic position, not a single European generative AI startup or company appears in the top list of firms worldwide. Venture capital culture is limited in Europe, and research and development are too rarely brought to market.

Generative AI, economic security and critical technologies

In the summer of 2023, the European Commission proposed the first-of-its-kind Economic Security Strategy, which aims to address the economic security risks arising from economic flows and activities that may be vulnerable or threatened amid current geopolitical tensions and accelerated technological development.

The European Economic Security Strategy is based on a three-pillar approach, or three Ps: promotion of the EU’s economic base and competitiveness; protection against risks; and partnership with countries with shared concerns and interests. The four areas that require risk assessment are: resilience of supply chains, including energy security; physical and cyber-security of critical infrastructure; technology security and leakage; and weaponization of economic dependencies and coercion.

One of the first deliverables has been the European Commission’s proposed list of critical technologies, which invites Member States to submit their risk assessments and feeds into collective work to determine which proportionate and targeted measures should be taken to promote, protect and partner in specific technology areas. The goal is two-fold: to reduce dependencies on third actors whose supply chains and political security may be high-risk, and to promote the diversification of strategic assets across the Union and with trusted partners.

Of the ten technology areas in the proposed list, artificial intelligence is the second priority. The technologies to be assessed in this area, a non-exhaustive list that may be expanded, include high-performance computing, cloud and edge computing, data analytics technologies, and computer vision, language processing and object recognition. Most of these technologies interact directly with generative AI, either because generative AI helps improve or optimize them, or because generative AI depends on them to be developed and run.

From a practical perspective, several pressing challenges arise regarding this proposed list of critical technologies and how generative AI fits into it. First, the list is aligned with the de-risking strategy of the President of the European Commission, Ursula von der Leyen. Its goal is not to build an inward-looking market based on domestic manufacturing or to withdraw from global supply chains (decoupling). The objective is to promote global trade while the EU reduces its dependencies on high-risk third actors and diversifies its strategic assets with trusted partners (friend-shoring). Although the de-risking approach was initially criticized by some countries that considered the EU’s approach to China not assertive enough, the United States eventually embraced this discourse, and National Security Advisor Jake Sullivan reiterated it during 2023.

In practice, however, applying a de-risking approach to generative AI may prove hard, at least for now, because the technology still has several security loopholes and risks, as discussed in previous sections, that make real-time, comprehensive monitoring of its potential challenges to global security and economic resilience complex. The EU-US Trade and Technology Council has been working since 2021 on joint early warning and monitoring systems for certain technologies, such as advanced semiconductors, but this monitoring requires extensive existing data, due diligence compliance, and fluid dialogue and partnerships with the private sector that designs, produces and deploys these systems.

Additionally, the actual effect of the export controls on certain semiconductors and AI components bound for China, led by the United States and joined by the Netherlands and Japan, remains to be seen. As stated in previous sections, restrictions on semiconductor exports to China would limit Chinese companies’ access to the GPUs that are essential for Large Language Models.

Related to this point, one of the reasons the Economic Security Strategy and the list of critical technologies were proposed was the identified lack of comprehensive, fully-fledged coordination and cooperation among EU Member States on technology issues. While the Netherlands’ decision to join the US-led export control regime is valid under the EU’s division of shared and exclusive competences, it showed EU institutions how badly the actual implementation of collective measures is needed.

When it comes to generative AI, the main question is how each Member State, in the national risk assessment it is to send to the European Commission before the end of 2023, will treat generative AI: as a security risk, threat or challenge; as a purely economic issue; or as a topic to be addressed only through regulation (namely, the AI Act proposal). As has occurred with other proposals, such as the 5G Cybersecurity Toolbox, Member States may take different political, security and market approaches to the same issue.

A related question is how to address generative AI and economic security with regard to China. Neither the strategy nor the proposed list mentions the country explicitly. Some Member States favor a more assertive approach to China, while others prefer not to adopt one.

Generative AI, the Brussels effect and the EU as a regulatory powerhouse

The European Union has become a global benchmark for much of technology-related regulation. During the early development of the AI Act proposal, first presented in April 2021, generative AI was never mentioned. However, the surge in the use of generative AI since the end of 2022 has led the actors involved in the proposal to include it as a relevant issue.

Concretely, the European Parliament has proposed that generative AI systems be subject to three levels of obligations: specific obligations for generative AI, specific obligations for foundation models, and general obligations applicable to all AI systems.

The EU has long been regarded as the regulatory powerhouse of technology policy. For several years, the ‘Brussels effect’ has been invoked to explain how EU regulation leads third countries to shape their own technology legislation along similar lines. In the case of generative AI, it remains to be seen what scope and depth the AI Act proposal will give it.

Meanwhile, a notable development is how the EU and some of its Member States are seeking a prominent voice in global technology governance dialogues. In particular, the Spanish government, jointly with the United Kingdom and the United States, launched the OECD Global Forum on Technology, where generative AI was one of the two top priority topics. Likewise, the Spanish Presidency of the Council of the EU, which is expected to wrap up the AI Act file during the second half of 2023, is pushing to frame generative AI both as an economic opportunity and as an area where fundamental rights must be guaranteed and protected.

Generative AI, the foreign policy of technology, and multilateralism

The EU does not address technology policy only through regulation, market and security considerations. Technology has become a foreign policy asset and a relevant topic of discussion in multilateral and minilateral fora. Two main frameworks help explain how the EU might bring generative AI into global discussions.

First, the Global Gateway, launched in December 2021 as the EU’s major plan for investment in infrastructure development with third countries, makes the digital pillar its first priority. Concretely, the work with partner countries focuses on digital networks and infrastructures, including Artificial Intelligence. Generative AI is not mentioned in the communication, partly because of when it was published. Given how long it has taken to get projects off the ground, generative AI is unlikely to become a top priority in the Global Gateway, as other technologies offer greater maturity, more investment opportunities, established due diligence compliance and trusted partnerships with third countries, and a long track record of public-private partnerships. Even so, generative AI is a topic that should not be overlooked.

Second, in July 2022 the Council of the European Union approved the first-ever framework for EU digital diplomacy. The European External Action Service was mandated to frame all of the EU’s international technology partnerships through a formal action plan and operational initiatives. This was to be done with greater coordination, under a single umbrella, and through a much more diplomacy-oriented arm: a network of flagship EU Delegations that treat technology policy as a top priority in their negotiations and activities.

So far, the EU has developed several partnerships with third countries. The EU-US Trade and Technology Council (TTC), launched in 2021, has a dedicated working group on Artificial Intelligence. In December 2022, both partners announced the Joint Roadmap on Evaluation and Measurement Tools for Trustworthy AI and Risk Management, whose implementation plan contains short- and long-term goals. These goals mainly cover establishing inclusive cooperation channels; advancing shared terminologies and taxonomies; analyzing AI standards and identifying those of interest for cooperation; developing tools for evaluation, selection, inclusion and revision; and setting up systems to monitor and measure existing and emerging AI risks.

One of the first deliverables has been an initial draft of AI terminologies and taxonomies, developed with stakeholder engagement, which identifies 65 terms drawn from key reference documents. The main goal is to advance a common vision on how to govern AI and to carry shared agendas into multilateral negotiating settings. Apart from the expert working group on terminologies, two other working groups are ongoing: one on standards and one on emerging risks.

Generative AI systems have received attention in the TTC. Concretely, at the TTC meeting held in Luleå, Sweden, in May 2023, a panel discussion on large AI models took place in response to the “recently witnessed acceleration of generative AI”. The main outcome was a shared commitment by the EU and the US to foster further international cooperation and a faster global approach to AI.

The EU has other international technology partnerships. However, references to generative AI in them have been far more limited, mainly because the depth and scope of topics in other dialogues are not as broad as in the transatlantic TTC. In some cases, the EU approaches AI with third countries in terms of ethics, definitions and taxonomies. For example, the Japan-EU Digital Partnership Agreement covers topics such as global supply chains, secure 5G, digitalization of public services, digital trade, global and interoperable standards, and digital education. It also addresses safe and ethical applications of AI.

However, there have been no major developments or joint declarations on this issue, beyond their common membership of the G20 (the EU as a participant with no voting rights). The declaration on the EU-Republic of Korea Digital Partnership Agreement points to the need to discuss definitions, use cases, high-risk AI applications and response measures, and to facilitate cooperation in relevant fora, such as the Global Partnership on AI (GPAI) and the OECD.

In other cases, the EU approaches AI cooperation through the lens of technical implementation. For instance, the EU-Singapore Digital Partnership Agreement focuses on interoperability, cooperation on AI testbeds and testing, cross-border access to AI technologies and solutions, and capabilities, with references to trustworthiness, adoptability and transparency.

An increasingly common reference is cooperation on AI in international forums, where both sides can advance common agendas. The partnership agreements with Japan and Korea refer to it. Likewise, the EU-India Trade and Technology Council encourages coordination within the GPAI, a wording that goes beyond the concept of cooperation and implies a greater level of joint work. The EU’s interest in India as a technological powerhouse has grown in recent years.

Some of the EU’s technology partnerships with regions and countries make no explicit reference to Artificial Intelligence, but capacity-building in other technology verticals may anticipate areas of interest that arise in the coming years. For example, the EU-LAC Digital Alliance, launched in early 2023, focused on regulatory convergence, digital infrastructure, the role of satellites (Earth observation and technology solutions for responding to climate hazards), talent, R&I, data centers and data spaces, with fewer references to AI.

In the case of Africa, the term “digital” has been on the agenda since the Sixth EU-Africa Business Forum in 2017, which highlighted the digital economy as a driver. Digital topics have since expanded considerably, covering digital skills, broadband connectivity, cross-border backbone infrastructure and e-services, but with limited references to AI. Digital for Development (D4D) Hubs in both Latin America and the Caribbean and Africa have organized workshops and training activities on Artificial Intelligence.

Overall, references to generative AI remain limited across global governance regimes, including the EU’s international technology partnerships. This trend is common to countries, regional organizations and international agencies. However, the launch of the first-ever United Nations High-Level Advisory Body on AI opens a window of opportunity to bring generative AI into the discussion as a relevant issue for its geographically and culturally diverse group of representatives.

A much-needed further policy discussion and framing of the impact of Generative AI on international affairs

Evidence shows that China and the United States are racing for global leadership in generative AI, although this race is rarely made explicit. Their instruments of power differ, however: while China leads in intellectual property and patents, has a large number of generative AI start-ups and is building a strong ecosystem of companies, the United States still leads in total investment in this technology vector, mostly through venture capital, which targets high-risk markets characterized by uncertainty and potential impact.

The impact of generative AI is three-pronged and brings both opportunities and challenges. In security, it touches on military applications, the intelligence community’s decision-making processes, and the growth of hybrid threats enabled by generative AI, such as deepfakes and foreign information manipulation and interference (FIMI). In the economy, it may lead to new cross-sector and cross-industry collaborations to develop generative AI. At the same time, it may become an area of competition showing familiar patterns, such as market concentration. In rights and global governance, the main forums have begun over the last two years, mostly in 2023, to hold early discussion panels on the impact of generative AI. Some issues have been touched upon, such as the future of work and the impact on human rights. However, much more work is needed before policy discussions are comprehensive enough to establish thorough principles, roadmaps and action plans.

In this scenario, the European Union should play a relevant role. Evidence shows that, economically, it lags behind in both large corporations and start-ups. In venture capital, too, it is falling behind and may fail to rank among the top three worldwide. With regard to security and rights, the EU is moving forward: it is covering generative AI under the umbrella of the AI Act with a three-level set of obligations and is contributing to discussions in international fora. Still, the EU’s bilateral technology partnerships with trusted countries and like-minded partners should deepen the conversation on generative AI. This is an opportunity to gain a first-mover advantage in this area of the foreign policy of technology, to agree on common principles, and to become more influential on the international agenda.

(*) This paper was originally published as a chapter of the PromethEUs’ Joint Publication ‘Artificial Intelligence: Opportunities, Risks and Regulation’, on 14 November 2023.

See also: La geopolítica de la IA generativa: implicaciones internacionales y el papel de la Unión Europea (The geopolitics of generative AI: international implications and the role of the European Union).

Image: Two AI GPU processors with a lot of chips around on a motherboard. Photo: Igor Omilaev (@omilaev).